
Enhancing LLM Reasoning Skills through Active Pre-Training Thinking Strategies

Revolutionizing Language Model Training with Reinforcement Learning Pre-Training

Researchers at Nvidia have developed a new technique that changes how large language models (LLMs) learn to reason. The method, called reinforcement learning pre-training (RLP), introduces reinforcement learning during the initial pre-training phase instead of reserving it for later training stages.

The approach encourages models to develop independent thinking behavior early in pre-training, so that they “think for themselves before predicting what comes next,” as the researchers put it in their paper.

The Impact of RLP on Reasoning Tasks

Because RLP trains models to reason on plain text without external verifiers, the resulting models show significant improvements on complex reasoning tasks. This points toward more capable and adaptable AI systems for real-world applications.

The Traditional LLM Training Cycle

Conventionally, large language models are pre-trained on vast text datasets with a “next-token prediction” objective: given a passage of text, the model repeatedly predicts the next word (token), and in doing so it learns grammar, facts, and basic associations.
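To make the next-token prediction objective concrete, here is a minimal Python sketch that scores a model's predictions with the standard average negative log-likelihood (cross-entropy) loss. The three-word vocabulary, the hypothetical `toy_model` function, and its probabilities are invented for illustration and have nothing to do with Nvidia's actual setup.

```python
import math

# Hypothetical toy "model": maps a context (tuple of previous tokens) to a
# probability distribution over the next token. Real LLMs compute this with a
# neural network over a vocabulary of tens of thousands of tokens.
def toy_model(context):
    if context[-1] == "the":
        return {"cat": 0.6, "dog": 0.3, "sat": 0.1}
    if context[-1] == "cat":
        return {"sat": 0.7, "the": 0.2, "dog": 0.1}
    return {"the": 0.5, "cat": 0.25, "dog": 0.25}

def next_token_loss(tokens, model):
    """Average negative log-likelihood of each token given all tokens before it."""
    total = 0.0
    for t in range(1, len(tokens)):
        probs = model(tuple(tokens[:t]))
        # Penalty is -log p(actual next token | context); a tiny floor avoids log(0).
        total += -math.log(probs.get(tokens[t], 1e-12))
    return total / (len(tokens) - 1)

sentence = ["the", "cat", "sat"]
print(round(next_token_loss(sentence, toy_model), 4))  # prints 0.4338
```

Pre-training minimizes this quantity over enormous amounts of text: the more probability the model assigns to the tokens that actually come next, the lower the loss.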

In the subsequent post-training phase, models typically acquire complex reasoning skills such as chain-of-thought (CoT), in which the model writes out intermediate reasoning steps, through supervised fine-tuning or reinforcement learning from human feedback. However, this sequential process does not match how humans comprehend text: people integrate new input with their prior knowledge in parallel, whereas conventional pre-training has no step in which that integration happens.

How RLP Enhances Pre-Training

RLP changes the pre-training process by treating CoT generation as an action the model takes before predicting each token. At each step, the model first generates an internal reasoning chain and then predicts the next word; it is rewarded according to how much that reasoning improves its prediction of the actual next token.
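The reward idea can be sketched in a few lines of Python under one simplifying assumption: a thought's reward is the increase in log-probability of the true next token when the model predicts after thinking, compared with predicting directly without a thought. The `TOY_PROBS` table, `log_prob`, and `thought_reward` are hypothetical stand-ins invented for this illustration, and Nvidia's exact reward formulation and baseline may differ.

```python
import math

# Hypothetical toy probabilities p(next_token | context, thought), invented for
# illustration. A real system would get these from the language model itself.
TOY_PROBS = {
    ("2 + 2 =", None, "4"): 0.20,                              # no thought
    ("2 + 2 =", "Adding two and two gives four.", "4"): 0.90,  # helpful thought
    ("2 + 2 =", "The sky is blue.", "4"): 0.15,                # unhelpful thought
}

def log_prob(context, next_token, thought=None):
    """Log-probability of the true next token, optionally conditioned on a thought."""
    return math.log(TOY_PROBS[(context, thought, next_token)])

def thought_reward(context, next_token, thought):
    """Reward = gain in log-likelihood of the true next token from thinking first,
    measured against an assumed no-thought baseline prediction."""
    return log_prob(context, next_token, thought) - log_prob(context, next_token)

ctx = "2 + 2 ="
print(round(thought_reward(ctx, "4", "Adding two and two gives four."), 3))  # prints 1.504
print(round(thought_reward(ctx, "4", "The sky is blue."), 3))                # prints -0.288
```

A positive reward means the thought made the correct continuation more likely; a negative reward means it got in the way. The signal needs no external verifier, only the next token already present in the text, which is why a reward of this kind can be computed on plain text.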

Because only thoughts that actually aid prediction are rewarded, RLP teaches models to think usefully on ordinary, unstructured text and to learn when deeper reasoning is worthwhile. This foundation complements later fine-tuning stages and makes them more effective.
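The article does not say how RLP turns per-thought rewards into model updates, so the following Python sketch assumes one common recipe rather than describing Nvidia's: sample several candidate thoughts for the same text position, score each reward against the group's mean and spread (similar in spirit to GRPO-style advantages), and plug the resulting advantages into a REINFORCE-style policy-gradient loss. The functions `group_advantages` and `policy_gradient_loss`, along with all of the numbers, are hypothetical.

```python
from statistics import mean, pstdev

def group_advantages(rewards, eps=1e-8):
    """Score each sampled thought relative to the other thoughts for the same position.

    rewards: per-thought rewards at one text position (e.g. log-likelihood gains
             on the true next token). Returns mean-centered, std-normalized values:
             positive for better-than-average thoughts, negative otherwise.
    """
    baseline = mean(rewards)
    spread = pstdev(rewards)
    return [(r - baseline) / (spread + eps) for r in rewards]

def policy_gradient_loss(advantages, thought_logprobs):
    """REINFORCE-style surrogate loss. In a real framework thought_logprobs would be
    differentiable tensors; minimizing this raises the probability of thoughts with
    positive advantage and lowers the probability of the rest."""
    return -mean(a * lp for a, lp in zip(advantages, thought_logprobs))

# Invented rewards and log-probabilities for four thoughts sampled at one position.
rewards = [1.5, -0.3, 0.2, 0.2]
thought_logprobs = [-12.0, -9.5, -10.1, -11.3]

advantages = group_advantages(rewards)
for r, a in zip(rewards, advantages):
    print(f"reward={r:+.2f}  advantage={a:+.2f}")
print(f"loss={policy_gradient_loss(advantages, thought_logprobs):.3f}")
```

Comparing each thought with its siblings means a thought is reinforced only if it helps more than the model's typical thought at that position. When every candidate helps about equally (for example, on an easy, predictable token), the advantages shrink toward zero and the update carries little signal.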

RLP Advantages and Achievements

Experiments with the Qwen3-1.7B and Nemotron-Nano-12B models showed that RLP-trained versions outperformed conventionally trained counterparts on math and science reasoning benchmarks and other reasoning-heavy tasks. This stronger reasoning could benefit enterprise workloads such as financial analysis and document summarization.

RLP also proved scalable and versatile: it can extract reasoning signals from general-purpose web data, and it delivered substantial improvements over heavily trained baselines while using comparatively little data.

Building a Smarter AI Foundation

RLP marks a shift toward a more robust and structured approach to pre-training, one that lays the groundwork for models that learn to reason from the outset. The paradigm combines next-token prediction with a reinforcement-style objective so that models develop deeper thinking early in training.

As reinforcement learning dynamics during pre-training are studied further, the prospect of scaling models' reasoning capabilities this way becomes increasingly clear. RLP opens a new avenue for improving how models learn to reason, pointing toward more active, curious, and efficient learning.

-

Video Games2 days ago

Video Games2 days agoGoku Takes on the Dragon Ball FighterZ Arena

-

Video Games3 days ago

Video Games3 days agoTekken 8: Rise of the Shadows

-

Amazon3 days ago

Amazon3 days agoNeil Young Takes a Stand: Pulling Music from Amazon in Protest of Jeff Bezos’ Support for Trump

-

Cars1 day ago

Cars1 day agoRevving into the Future: Ferrari’s Plan to Unleash 20 New Models, Including Electric Vehicles, by 2030

-

Tech News3 days ago

Tech News3 days agoSamsung Galaxy UI 8: Embracing the Big Free AI Upgrade

-

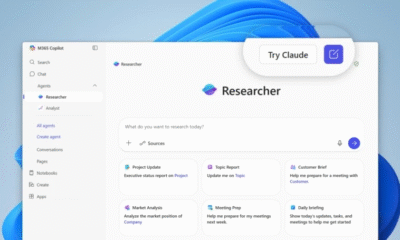

Microsoft1 day ago

Microsoft1 day agoMicrosoft Integrates Anthropic’s Claude AI Models into 365 Copilot: A Deepening Relationship with OpenAI

-

Security3 days ago

Security3 days agoCritical Vulnerability Exposed: Oracle EBS Targeted in Recent Cyber Attacks by Cl0p Hackers

-

Microsoft3 days ago

Microsoft3 days agoEnhanced Copilot Features: Creating Office Documents and Gmail Integration