
Unlocking the Power of AI with NVIDIA Spectrum-X: Revolutionizing Data Centre Efficiency

Meta and Oracle Enhance AI Data Centers with NVIDIA Spectrum-X Ethernet Networking Switches

Meta and Oracle are upgrading their AI data centers with NVIDIA’s Spectrum-X Ethernet networking switches, specifically designed to meet the increasing demands of large-scale AI systems. The adoption of Spectrum-X by both companies is part of an open networking framework aimed at enhancing AI training efficiency and expediting deployment across extensive compute clusters.

NVIDIA’s founder and CEO, Jensen Huang, said that trillion-parameter models are transforming data centers into “giga-scale AI factories.” He described Spectrum-X as the “nervous system” that connects millions of GPUs to train the largest models ever built.

Oracle intends to use Spectrum-X Ethernet with NVIDIA’s Vera Rubin architecture to build large-scale AI factories. Mahesh Thiagarajan, Executive Vice President of Oracle Cloud Infrastructure, said the new setup will let the company connect millions of GPUs more efficiently, so customers can train and deploy new AI models faster.

Meta, for its part, is expanding its AI infrastructure by integrating Spectrum-X Ethernet switches into the Facebook Open Switching System (FBOSS), its in-house platform for managing network switches at scale. Gaya Nagarajan, Meta’s Vice President of Networking Engineering, said the company’s next-generation network must be open and efficient to support ever-larger AI models and serve billions of users.

Constructing Adaptable AI Systems

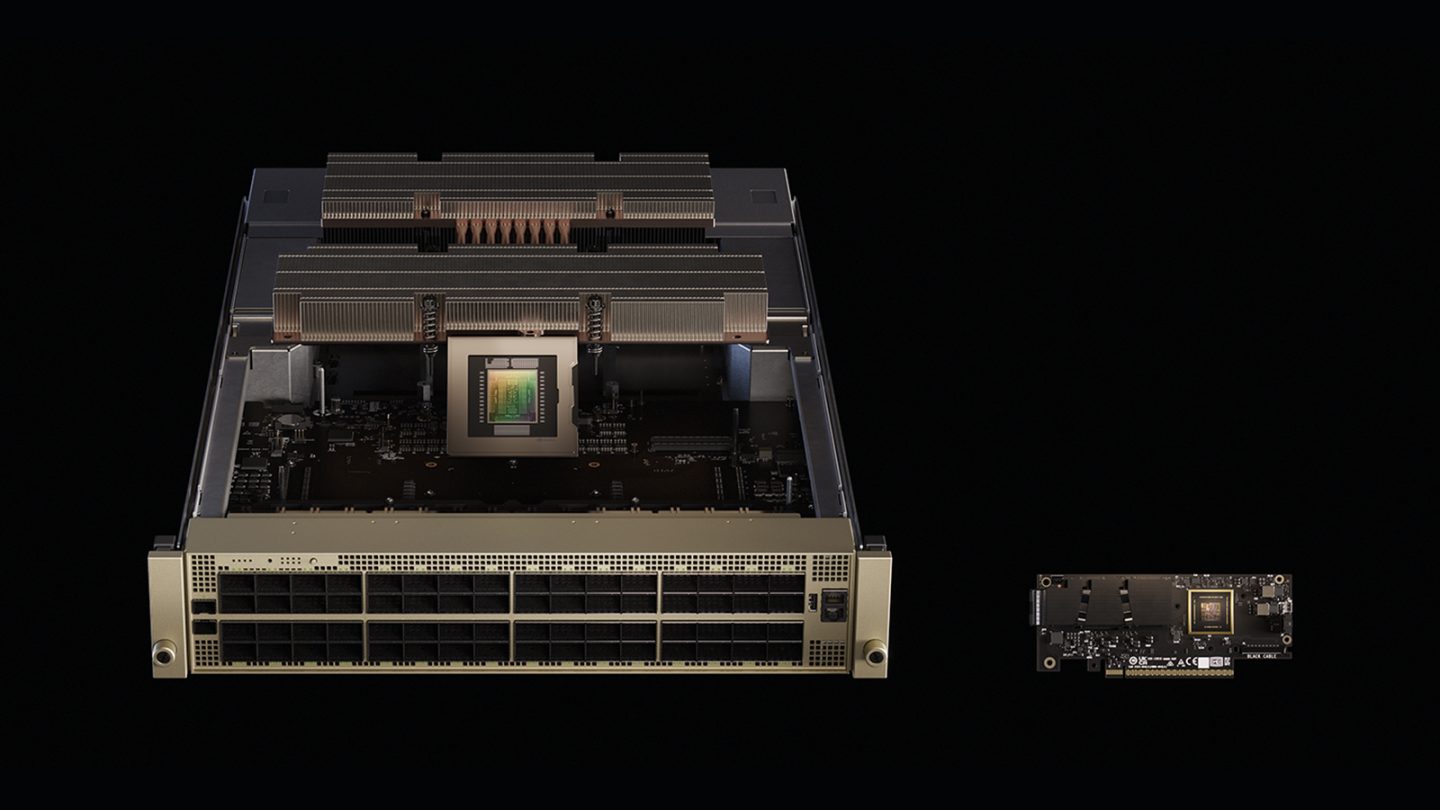

Joe DeLaere, who heads NVIDIA’s Accelerated Computing Solution Portfolio for Data Centers, stressed the importance of flexibility as data centers become more intricate. He explained that NVIDIA’s MGX system offers a modular, building-block design that allows partners to combine various CPUs, GPUs, storage, and networking components as required.

The system also promotes interoperability, enabling organizations to utilize the same design across multiple hardware generations. DeLaere mentioned that this approach provides flexibility, accelerates time to market, and ensures future readiness.

As AI models grow, power efficiency becomes a central challenge for data centers. DeLaere outlined NVIDIA’s efforts to improve energy efficiency and scalability “from chip to grid,” collaborating closely with power and cooling vendors to maximize performance per watt.

One notable initiative is the transition to 800-volt DC power delivery, which reduces heat loss and boosts efficiency. Additionally, the introduction of power-smoothing technology aims to minimize spikes on the electrical grid, potentially reducing maximum power requirements by up to 30%, thereby allowing for increased compute capacity within the same physical footprint.
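The arithmetic behind both claims can be sketched in a few lines. This is a simplified illustration, not NVIDIA’s model: the rack power, the path resistance, and the 54-volt baseline are hypothetical values chosen for the example, and the capacity calculation assumes a site limited purely by provisioned peak power. Only the 800-volt and 30% figures come from the article.

```python
def resistive_loss_watts(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Conduction loss (I^2 * R) when delivering power_w at voltage_v."""
    current_a = power_w / voltage_v
    return current_a ** 2 * resistance_ohm


def capacity_gain_from_peak_reduction(peak_reduction: float) -> float:
    """Factor by which load can grow inside a fixed peak-power budget."""
    return 1.0 / (1.0 - peak_reduction)


# Hypothetical inputs, chosen only to make the comparison concrete.
RACK_POWER_W = 100_000.0      # assumed rack power draw
PATH_RESISTANCE_OHM = 0.001   # assumed resistance, held equal for both cases
BASELINE_VOLTAGE_V = 54.0     # assumed lower-voltage baseline (not from the article)
HIGH_VOLTAGE_V = 800.0        # figure cited in the article

loss_baseline = resistive_loss_watts(RACK_POWER_W, BASELINE_VOLTAGE_V, PATH_RESISTANCE_OHM)
loss_high = resistive_loss_watts(RACK_POWER_W, HIGH_VOLTAGE_V, PATH_RESISTANCE_OHM)
loss_ratio = loss_baseline / loss_high  # equals (800 / 54) ** 2

capacity_gain = capacity_gain_from_peak_reduction(0.30)  # "up to 30%" from the article

print(f"conduction loss ratio (baseline / 800 V): {loss_ratio:.1f}x")
print(f"capacity factor for a 30% lower peak:     {capacity_gain:.2f}x")
```

For the same power and the same conductor, loss scales with the inverse square of voltage, which is why raising the distribution voltage cuts heat. Real installations size conductors differently at each voltage, so the ratio here is an upper-bound illustration rather than a prediction.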

Scaling Up, Out, and Across

NVIDIA’s MGX system plays a crucial role in scaling data centers. Gilad Shainer, NVIDIA’s Senior Vice President of Networking, highlighted that MGX racks host both compute and switching components, supporting NVLink for scale-up connectivity and Spectrum-X Ethernet for scale-out expansion.

Shainer also mentioned that MGX can interconnect multiple AI data centers as a unified system—essential for companies like Meta to support extensive distributed AI training operations. Depending on the distance between sites, connections can be established through dark fiber or additional MGX-based switches, facilitating high-speed connectivity across regions.

Meta’s adoption of Spectrum-X in their AI infrastructure underscores the growing significance of open networking. Shainer indicated that the company will utilize FBOSS as its network operating system, while Spectrum-X is compatible with several other systems through partnerships, including Cumulus, SONiC, and Cisco’s NOS. This flexibility allows hyperscalers and enterprises to standardize their infrastructure using systems that best suit their environments.

Expanding the AI Ecosystem

NVIDIA views Spectrum-X as a tool to enhance the efficiency and accessibility of AI infrastructure across various scales. Shainer highlighted that the Ethernet platform was specifically designed for AI workloads such as training and inference, offering up to 95% effective bandwidth and surpassing traditional Ethernet by a significant margin.

Collaborations with companies like Cisco, xAI, Meta, and Oracle Cloud Infrastructure are instrumental in broadening the reach of Spectrum-X to diverse environments, from hyperscalers to enterprises.

Preparing for Vera Rubin and Beyond

DeLaere disclosed that NVIDIA’s upcoming Vera Rubin architecture is slated for commercial availability in the second half of 2026, with the Rubin CPX product expected to launch by the year’s end. Both solutions will work in conjunction with Spectrum-X networking and MGX systems to support the next generation of AI factories.

He clarified that Spectrum-X and XGS share the same core hardware but use different algorithms depending on distance: Spectrum-X for communication within a data center and XGS for communication between data centers. This strategy minimizes latency and enables multiple sites to function collectively as a massive AI supercomputer.

Collaboration Across the Power Chain

To facilitate the 800-volt DC transition, NVIDIA is collaborating with partners across the entire power chain, from the chip level to the grid. The company is working with Onsemi and Infineon on power components, alongside Delta, Flex, and Lite-On at the rack level, and in partnership with Schneider Electric and Siemens on data center designs. A technical white paper outlining this approach will be released at the OCP Summit.

DeLaere described this comprehensive approach as a “holistic design from silicon to power delivery,” ensuring seamless operation of all systems in high-density AI environments operated by companies like Meta and Oracle.

Performance Advantages for Hyperscalers

Spectrum-X Ethernet was purpose-built for distributed computing and AI workloads. Shainer emphasized that it offers features such as adaptive routing and telemetry-based congestion control to eliminate network bottlenecks and deliver consistent performance. These capabilities enable higher training and inference speeds while supporting multiple workloads simultaneously without interference.
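A toy model helps show why load-aware path selection matters. The sketch below is not NVIDIA’s adaptive routing algorithm. It only compares two hypothetical placement policies for equal-sized flows across parallel links: pinning each flow to a randomly chosen link (a stand-in for static hash-based placement) and sending each flow to the currently least-loaded link. The flow count, link count, and trial count are arbitrary assumptions.

```python
import random
from collections import Counter


def static_placement_efficiency(num_flows: int, num_links: int, rng: random.Random) -> float:
    """Pin each flow to a random link; return ideal load / busiest-link load."""
    load = Counter(rng.randrange(num_links) for _ in range(num_flows))
    return (num_flows / num_links) / max(load.values())


def load_aware_placement_efficiency(num_flows: int, num_links: int) -> float:
    """Place each flow on the least-loaded link; return ideal load / busiest-link load."""
    load = [0] * num_links
    for _ in range(num_flows):
        load[load.index(min(load))] += 1
    return (num_flows / num_links) / max(load)


# Hypothetical scenario: 64 equal flows over 16 parallel links.
NUM_FLOWS, NUM_LINKS, TRIALS = 64, 16, 1000
rng = random.Random(0)  # fixed seed so the run is repeatable

static_mean = sum(
    static_placement_efficiency(NUM_FLOWS, NUM_LINKS, rng) for _ in range(TRIALS)
) / TRIALS
load_aware = load_aware_placement_efficiency(NUM_FLOWS, NUM_LINKS)

print(f"static placement, mean efficiency: {static_mean:.2f}")
print(f"load-aware placement efficiency:   {load_aware:.2f}")
```

An efficiency of 1.0 means no link carries more than its fair share, so the transfer finishes as fast as the fabric allows. Static placement falls below that whenever several flows land on the same link. The numbers from this toy are not meant to reproduce any vendor’s measured figures.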

Shainer highlighted that Spectrum-X is the only Ethernet technology proven to scale effectively at extreme levels, assisting organizations in optimizing performance and maximizing returns on their GPU investments. For hyperscalers like Meta, this scalability is crucial in managing the escalating demands of AI training and ensuring efficient infrastructure.

Hardware and Software Synergy

While NVIDIA’s primary focus is on hardware, DeLaere emphasized the significance of software optimization. The company continues to enhance performance through co-design, aligning hardware and software development to maximize efficiency for AI systems.

NVIDIA is investing in FP4 kernels, frameworks like Dynamo and TensorRT-LLM, and algorithms such as speculative decoding to improve throughput and AI model performance. These ongoing improvements help systems like Blackwell deliver better results over time for hyperscalers like Meta that depend on consistent AI performance.

Networking for the Trillion-Parameter Era

NVIDIA’s Spectrum-X platform, encompassing Ethernet switches and SuperNICs, represents the company’s first Ethernet system specifically tailored for AI workloads. It is designed to efficiently connect millions of GPUs while maintaining predictable performance across AI data centers.

With congestion-control technology achieving up to 95% data throughput, Spectrum-X represents a significant advancement over standard Ethernet, which typically achieves around 60% due to flow collisions. Additionally, its XGS technology supports long-distance links between AI data centers, connecting facilities across regions into unified “AI super factories.”
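To put the two percentages in concrete terms, the short calculation below applies them to a single link. The 400 Gb/s line rate and the 1-terabyte payload are hypothetical values chosen for illustration. Only the 95% and 60% figures come from the article, and real transfers depend on many factors this sketch ignores.

```python
def effective_gbps(line_rate_gbps: float, efficiency: float) -> float:
    """Usable bandwidth on a link given its effective-throughput fraction."""
    return line_rate_gbps * efficiency


def transfer_seconds(payload_gigabits: float, line_rate_gbps: float, efficiency: float) -> float:
    """Time to move a payload at the link's effective bandwidth."""
    return payload_gigabits / effective_gbps(line_rate_gbps, efficiency)


LINE_RATE_GBPS = 400.0       # hypothetical link speed
PAYLOAD_GIGABITS = 8_000.0   # hypothetical payload: 1 terabyte = 8,000 gigabits

SPECTRUM_X_EFFICIENCY = 0.95  # "up to 95%" per the article
STANDARD_EFFICIENCY = 0.60    # "around 60%" per the article

time_spectrum_x = transfer_seconds(PAYLOAD_GIGABITS, LINE_RATE_GBPS, SPECTRUM_X_EFFICIENCY)
time_standard = transfer_seconds(PAYLOAD_GIGABITS, LINE_RATE_GBPS, STANDARD_EFFICIENCY)
speedup = time_standard / time_spectrum_x  # equals 0.95 / 0.60 regardless of link speed

print(f"effective bandwidth: {effective_gbps(LINE_RATE_GBPS, SPECTRUM_X_EFFICIENCY):.0f} "
      f"vs {effective_gbps(LINE_RATE_GBPS, STANDARD_EFFICIENCY):.0f} Gb/s")
print(f"transfer-time ratio: {speedup:.2f}x")
```

The ratio depends only on the two efficiency figures, so the same relative gain applies at any line rate under this simplified model.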

By integrating NVIDIA’s full stack—GPUs, CPUs, NVLink, and software—Spectrum-X delivers the consistent performance necessary to support trillion-parameter models and the next wave of generative AI workloads.
