Revolutionizing Memory Architecture for Agentic AI Scale-Up

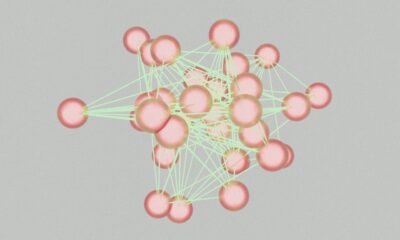

Agentic AI marks a significant step beyond basic chatbots toward complex, multi-step workflows. Scaling it requires a new memory architecture that can handle the rising computational cost of remembering history.

With foundation models growing to trillions of parameters and context windows expanding to millions of tokens, the challenge is managing that memory efficiently. Organisations deploying these systems face a bottleneck caused by the sheer volume of “long-term memory”, technically known as the Key-Value (KV) cache.

Existing infrastructure forces a choice: store inference context in expensive high-bandwidth GPU memory (HBM), or push it out to slow general-purpose storage. For large contexts neither option is sustainable, since the first is too costly and the second adds latency that undermines real-time interaction.

To tackle this challenge, NVIDIA has introduced the Inference Context Memory Storage (ICMS) platform within its Rubin architecture. This platform offers a new storage tier specifically designed to handle the dynamic nature of AI memory.

NVIDIA CEO Jensen Huang emphasizes that AI is shifting toward intelligent collaborators that understand the physical world and can retain both short- and long-term memory.

The operational challenge stems from how transformer-based models work: they store previous states in the KV cache so that the conversation history does not have to be recomputed for each newly generated token. This cache is critical to immediate performance but, because it is derived data, it does not need the durability guarantees of enterprise file systems.
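
To get a feel for why this cache grows so large, the standard sizing rule can be written out: each cached token needs one key and one value vector per layer and per KV head. The sketch below applies that rule to a made-up model shape. The layer count, head count, head dimension and 16-bit precision are assumptions chosen for illustration, not figures from NVIDIA or any specific model.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_elem=2):
    """Bytes needed to cache keys and values for `seq_len` tokens."""
    # Factor of 2: one key tensor and one value tensor per layer.
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_elem)


# Hypothetical model shape, for illustration only.
shape = dict(num_layers=80, num_kv_heads=8, head_dim=128)

per_token = kv_cache_bytes(seq_len=1, **shape)
full_context = kv_cache_bytes(seq_len=1_000_000, **shape)

print(f"per token:        {per_token} bytes")
print(f"1M-token context: {full_context / 2**30:.1f} GiB")
```

Under these assumptions a single million-token context needs roughly 305 GiB, and the total scales linearly with context length and with the number of concurrent sessions.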

The current storage hierarchy, from GPU HBM down to shared storage, is becoming inefficient: as context moves across tiers, latency and power costs rise.
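
A back-of-the-envelope way to reason about the cost of moving context between tiers is to divide the context size by the bandwidth of the link it crosses. The sketch below is illustrative only: the context size, the tier labels and all three bandwidth values are placeholder assumptions, not measurements or vendor specifications.

```python
def transfer_seconds(num_bytes, bandwidth_gb_per_s):
    """Idealised transfer time; ignores protocol overhead and queuing."""
    return num_bytes / (bandwidth_gb_per_s * 1e9)


context_bytes = 40e9  # hypothetical 40 GB of KV cache for one session

# Placeholder bandwidths in GB/s, chosen only to show the spread.
tiers = {
    "fast tier (placeholder)": 1000.0,
    "pod-local flash tier (placeholder)": 50.0,
    "general-purpose storage (placeholder)": 5.0,
}

for name, bandwidth in tiers.items():
    seconds = transfer_seconds(context_bytes, bandwidth)
    print(f"{name}: {seconds:.2f} s to reload the context")
```

The absolute numbers are not meaningful, but the ratio between tiers is the point: an order-of-magnitude drop in bandwidth becomes an order-of-magnitude wait before the model can resume.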

A New Memory Tier for the AI Factory

The industry response involves introducing a purpose-built layer into the storage hierarchy. The ICMS platform establishes a new tier (G3.5) designed for gigascale inference, providing shared capacity per pod to enhance the scaling of agentic AI.

By integrating storage directly into the compute pod and using the NVIDIA BlueField-4 data processor, the platform offloads context data management from the host CPU, resulting in higher throughput and better energy efficiency.

Integrating the Data Plane

Implementing this architecture requires a shift in how IT teams view storage networking. The ICMS platform relies on NVIDIA Spectrum-X Ethernet for the high-bandwidth connectivity needed to treat flash storage as if it were local memory.

Frameworks like NVIDIA Dynamo and NIXL manage the movement of KV blocks between tiers, ensuring that the correct context is loaded into GPU or host memory when needed.
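
The general idea of moving KV blocks between tiers can be sketched with a toy two-tier store. This is not the Dynamo or NIXL API, and it does not reflect how those frameworks are implemented. The class name `TieredKVCache`, its methods and the least-recently-used policy are all hypothetical choices made for this example.

```python
from collections import OrderedDict


class TieredKVCache:
    """Toy two-tier block store: a small fast tier over a larger slow tier."""

    def __init__(self, fast_capacity):
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()  # block_id -> data, least recently used first
        self.slow = {}             # block_id -> data, stands in for the context tier

    def put(self, block_id, data):
        """Place a block in the fast tier, demoting old blocks if it is full."""
        self.slow.pop(block_id, None)
        self.fast[block_id] = data
        self.fast.move_to_end(block_id)
        while len(self.fast) > self.fast_capacity:
            old_id, old_data = self.fast.popitem(last=False)
            self.slow[old_id] = old_data  # demote instead of discarding

    def get(self, block_id):
        """Return a block, promoting it to the fast tier if it was demoted."""
        if block_id in self.fast:
            self.fast.move_to_end(block_id)
            return self.fast[block_id]
        if block_id in self.slow:
            data = self.slow.pop(block_id)
            self.put(block_id, data)
            return data
        return None  # miss in both tiers: the caller would have to recompute


cache = TieredKVCache(fast_capacity=2)
for block_id in ("a", "b", "c"):
    cache.put(block_id, f"kv-{block_id}")

print(sorted(cache.slow))  # "a" was demoted when "c" arrived
print(cache.get("a"))      # promoted back, which demotes "b"
print(list(cache.fast))
```

The design choice worth noting is that eviction demotes a block rather than dropping it, so a later request pays a transfer cost instead of a full recomputation.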

Major storage vendors are aligning with this architecture and building platforms based on BlueField-4, which are expected to become available in the near future.

Redefining Infrastructure for Scaling Agentic AI

Adopting a dedicated context memory tier impacts capacity planning and datacentre design, allowing for better efficiency and scalability for agentic AI.

- Reclassifying data: Recognizing KV cache as a unique data type, distinct from compliance data, enables better management of storage resources.

- Orchestration maturity: Intelligent workload placement and topology-aware orchestration are crucial for optimizing performance.

- Power density: Increasing compute density requires careful cooling and power distribution planning for efficient operations.

Introducing a specialized context tier enables enterprises to separate model memory growth from the cost of GPU HBM, enhancing scalability and reducing costs for serving complex queries.

As organisations plan their infrastructure investments, evaluating the efficiency of the memory hierarchy will be critical to maximizing AI performance.

