Innovation

Multi-functional Robot Backpack Drone: Revolutionizing Emergency Response

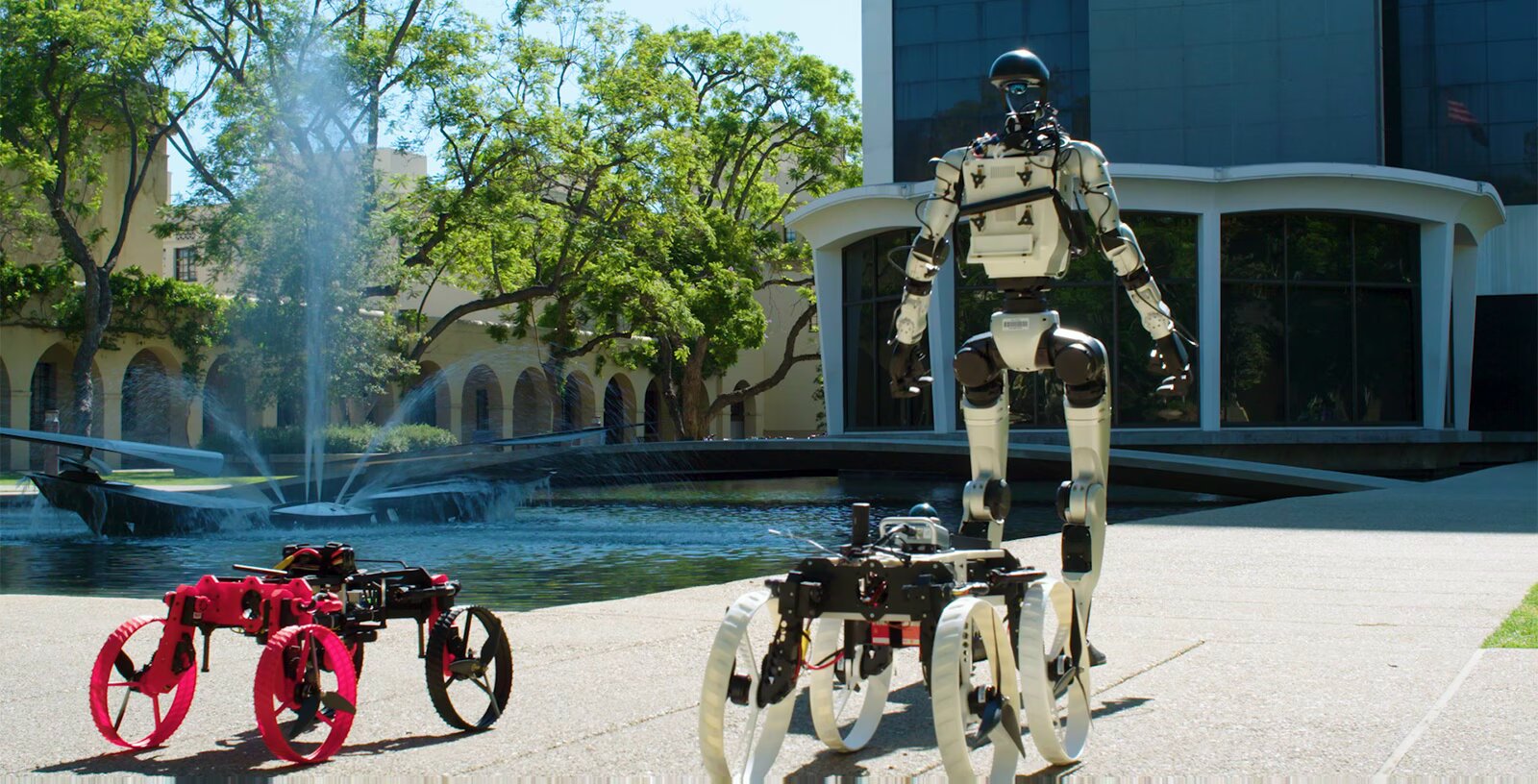

Introducing X1: The world’s first multirobot system that integrates a humanoid robot with a transforming drone that can launch off the humanoid’s back, and later, drive away.

The new multimodal system is one product of a three-year collaboration between Caltech’s Center for Autonomous Systems and Technologies (CAST) and the Technology Innovation Institute (TII) in Abu Dhabi, United Arab Emirates. The robotic system demonstrates the kind of innovative and forward-thinking projects that are possible with the combined global expertise of the collaborators in autonomous systems, artificial intelligence, robotics, and propulsion systems.

“Right now, robots can fly, robots can drive, and robots can walk. Those are all great in certain scenarios,” says Aaron Ames, the director and Booth-Kresa Leadership Chair of CAST and the Bren Professor of Mechanical and Civil Engineering, Control and Dynamical Systems, and Aerospace at Caltech. “But how do we take those different locomotion modalities and put them together into a single package, so we can excel from the benefits of all these while mitigating the downfalls that each of them have?”

To test the capability of the X1 system, the team recently conducted a demonstration on Caltech’s campus. The demo was based on the following premise: Imagine that there is an emergency somewhere on campus, creating the need to quickly get autonomous agents to the scene. For the test, the team modified an off-the-shelf Unitree G1 humanoid so that it could carry M4, Caltech’s multimodal robot that can both fly and drive, as if it were a backpack.

The demo started with the humanoid in Gates–Thomas Laboratory. It walked through Sherman Fairchild Library and went outside to an elevated spot where it could safely deploy M4. The humanoid then bent forward at the waist, allowing M4 to launch in its drone mode. M4 then landed and transformed into driving mode to efficiently continue on wheels toward its destination.

Before reaching that destination, however, M4 encountered the Turtle Pond, so it switched back to drone mode, quickly flew over the obstacle, and made its way to the site of the “emergency” near Caltech Hall.

The humanoid and a second M4 eventually joined the first M4, the first responder, at the scene.

The collaboration between Caltech and TII aims to create a unified system with a range of capabilities, combining expertise in flying and driving robots, locomotion, autonomy, sensing, and morphing robot design. The team is working on enhancing the system with sensors, model-based algorithms, and machine learning-driven autonomy for real-time navigation and adaptation to its surroundings. TII’s Saluki technology provides secure flight control and onboard computing for the new M4 version. The goal is to enable the robots to move autonomously, using sensors such as lidar and cameras to understand their environment and navigate effectively. Ames emphasizes the importance of developing robots that can perform actions without human references so that they can be deployed successfully in complex real-world scenarios.
