
Unlocking the Power of Sparse Models: A Breakthrough in Neural Network Debugging

OpenAI researchers are exploring a new approach to designing neural networks that aims to make AI models easier to understand, debug, and govern. Sparse models could give enterprises clearer insight into how these models reach their decisions.

Understanding how a model makes decisions, one of the main selling points of reasoning models for businesses, can give organizations a degree of trust when they rely on AI models for insights.

Rather than studying a model only after training, OpenAI’s method builds interpretability in from the start by training models whose computations run through sparse circuits.

Because AI models are so complex, they are often opaque, and researchers have had to build workarounds to get a better grasp of how they behave.

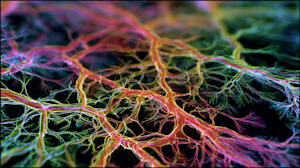

According to OpenAI, neural networks power today’s most advanced AI systems but remain hard to understand, because they learn by adjusting billions of internal connections. The resulting web of connections is very difficult for humans to decipher.

To improve interpretability, OpenAI explored training untangled neural networks, which are simpler to understand. By training language models with an architecture similar to existing models such as GPT-2, the team obtained models that were easier to interpret.
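One simple way to keep a network weight-sparse throughout training is to take an ordinary gradient step and then zero out every weight except the k with the largest magnitude. The Python sketch below shows that idea on a toy linear model. The model, the data, the learning rate, and the names `top_k_project` and `train_sparse_linear` are illustrative assumptions, not OpenAI’s architecture or training code.

```python
"""Toy sketch of weight-sparse training (hypothetical, not OpenAI's code):
after every gradient step, keep only the k largest-magnitude weights."""


def top_k_project(weights, k):
    """Return a copy of `weights` with all but the k largest |w| set to 0.0."""
    if k >= len(weights):
        return list(weights)
    # Indices sorted by descending magnitude; ties go to the lower index.
    order = sorted(range(len(weights)), key=lambda i: (-abs(weights[i]), i))
    keep = set(order[:k])
    return [w if i in keep else 0.0 for i, w in enumerate(weights)]


def train_sparse_linear(xs, ys, k, lr=0.1, steps=200):
    """Fit y ~ w.x by gradient descent on mean squared error while
    constraining w to at most k nonzero entries."""
    n_features = len(xs[0])
    w = [0.0] * n_features
    for _ in range(steps):
        grad = [0.0] * n_features
        for x, y in zip(xs, ys):
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j in range(n_features):
                grad[j] += 2.0 * err * x[j] / len(xs)
        # Ordinary dense gradient step...
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
        # ...then project back onto weight vectors with at most k nonzeros.
        w = top_k_project(w, k)
    return w


# Hypothetical data: one-hot inputs; the target depends strongly on
# features 0 and 2 and only weakly on feature 3.
xs = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
ys = [3.0, 0.0, -2.0, 0.1]
print([round(v, 3) for v in train_sparse_linear(xs, ys, k=2)])
```

With `k=2`, training should recover weights close to 3 and -2 on features 0 and 2 and leave the weak feature 3 at exactly zero. That is the trade a sparsity constraint makes: a slightly worse fit in exchange for far fewer connections to inspect.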

The Significance of Interpretability

OpenAI stresses that it matters how models work and how they arrive at their decisions, because those decisions have real-world consequences.

The company defines interpretability as methods that help explain why a model produced a given output. It distinguishes chain-of-thought interpretability, which relies on the reasoning steps a model writes out, from mechanistic interpretability, which reverse-engineers the model’s internal computations, and its work focuses on the latter.

Mechanistic interpretability is harder to achieve, but OpenAI says it can offer a more complete explanation of a model’s behavior.

Better interpretability allows closer oversight and gives earlier warning when a model’s behavior diverges from established policies.

OpenAI acknowledges that improving mechanistic interpretability is an ambitious undertaking, but says its research on sparse networks has produced promising results.

Untangling the Model

To untangle a model, OpenAI cut out most of its connections, leaving the rest in more orderly groupings. The approach also relied on circuit tracing and pruning to isolate the nodes and weights responsible for specific behaviors.
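Circuit pruning can be illustrated with a much simpler stand-in than OpenAI’s own procedure: greedily remove connections, smallest weights first, and keep each removal only if the model’s loss on a target task stays within a small margin of the unpruned model’s loss. Whatever survives is the circuit for that task. The tiny ReLU network, its weights, the `slack` parameter, and the function names below are hypothetical and chosen only to make the idea concrete.

```python
"""Toy sketch of circuit pruning by greedy ablation (a hypothetical
stand-in, not OpenAI's pruning procedure)."""


def forward(w_in, w_out, x, mask):
    """One-hidden-layer ReLU net; mask[edge] == False removes that edge."""
    out = 0.0
    for h, row in enumerate(w_in):
        pre = sum(w * xi for i, (w, xi) in enumerate(zip(row, x)) if mask[("in", h, i)])
        act = max(0.0, pre)
        if mask[("out", h, 0)]:
            out += w_out[h] * act
    return out


def task_loss(w_in, w_out, examples, mask):
    """Mean squared error of the (possibly pruned) net on the task examples."""
    return sum((forward(w_in, w_out, x, mask) - y) ** 2 for x, y in examples) / len(examples)


def prune_circuit(w_in, w_out, examples, slack):
    """Remove edges, smallest |weight| first, while task loss stays within
    `slack` of the full model's loss. Returns the surviving edges."""
    edges = {("in", h, i): w for h, row in enumerate(w_in) for i, w in enumerate(row)}
    edges.update({("out", h, 0): w for h, w in enumerate(w_out)})
    mask = {e: True for e in edges}
    limit = task_loss(w_in, w_out, examples, mask) + slack
    for e in sorted(edges, key=lambda e: abs(edges[e])):
        mask[e] = False
        if task_loss(w_in, w_out, examples, mask) > limit:
            mask[e] = True  # the behavior depends on this edge; restore it
    return {e for e, keep in mask.items() if keep}


# Hypothetical task: output a copy of the first input.
w_in = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
w_out = [1.0, 0.1, 0.0]
examples = [([1.0, 0.0], 1.0), ([0.0, 1.0], 0.0), ([2.0, 1.0], 2.0), ([1.0, 2.0], 1.0)]
print(sorted(prune_circuit(w_in, w_out, examples, slack=0.01)))
```

Of the network’s nine connections, only the two edges on the path through hidden unit 0 should survive, which is the kind of small, localized circuit the pruning step is meant to expose.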

Pruning the weight-sparse models produced circuits significantly smaller than those found in dense models, meaning individual behaviors were more disentangled and easier to localize.

Enhanced Training of Small Models

Although OpenAI succeeded in building sparse models that are easier to understand, these models are still smaller than most of the foundation models enterprises use. OpenAI expects, however, that advances in interpretability will eventually benefit frontier models such as GPT-5.1.

Other AI developers, including Anthropic and Meta, are also researching how reasoning models make decisions, reflecting the industry’s broader focus on transparency and trust.

As enterprises rely more heavily on AI models for critical decisions, research into model behavior will be crucial for building the trust and clarity needed to use these models effectively.
