Unveiling the Dark Side of AI: How Platforms Can Facilitate Covert Malware Communication

AI assistants such as Grok and Microsoft Copilot, which can browse the web and fetch URLs, can be abused as intermediaries for malware command-and-control (C2) traffic.

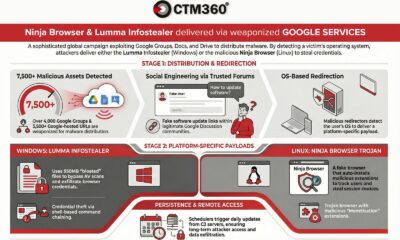

Researchers at cybersecurity firm Check Point found that attackers could use AI services as a relay for communication between a C2 server and a compromised machine.

By exploiting this method, attackers can issue commands to compromised machines and retrieve sensitive data from them.

Check Point’s researchers developed a proof-of-concept to demonstrate this process and shared their findings with Microsoft and xAI.

Instead of having the malware connect directly to the attacker's C2 server, Check Point's approach has the malware communicate with an AI web interface. The assistant behind that interface then fetches an attacker-controlled URL and relays the response back to the malware.

In this scenario, the malware interacts with the AI service using the WebView2 component in Windows 11. Even if this component is missing on the target system, the attacker can embed it within the malware.

WebView2 allows developers to display web content in native desktop applications, eliminating the need for a full-fledged browser.

Check Point’s researchers wrote a C++ program that opens a WebView pointing to either Grok or Copilot. Through it, the attacker can submit instructions to the assistant, including commands to execute on the compromised machine or requests to extract data from it.

The attacker-controlled page that the assistant fetches carries embedded instructions, which the attacker can change at any time, and the assistant relays them in its reply. The malware then parses the assistant’s output and extracts the instructions.

The result is a two-way communication channel that runs through the AI service. Because traffic to such services is commonly trusted, the exchange may blend in with legitimate activity and go unflagged by traditional security tools.
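From a defender's point of view, one place to look is which applications are hosting a WebView2 session to an AI assistant. The sketch below is a minimal illustration of that idea, not a method from Check Point's report. It assumes a hypothetical connection-log schema (`ConnEvent`), assumed hostnames for the two assistants, and a hypothetical allowlist of approved host applications. The only name taken from Windows itself is `msedgewebview2.exe`, the WebView2 runtime process.

```python
from dataclasses import dataclass

# Assumed hostnames for the two assistants named in the article.
AI_HOSTS = {"grok.com", "copilot.microsoft.com"}

# Hypothetical allowlist: applications expected to embed WebView2 here.
APPROVED_PARENTS = {"teams.exe", "outlook.exe"}

# Name of the WebView2 runtime process on Windows.
WEBVIEW2_PROCESS = "msedgewebview2.exe"


@dataclass(frozen=True)
class ConnEvent:
    """Hypothetical record from endpoint network telemetry."""
    parent_process: str  # application that launched the WebView2 runtime
    process: str         # process that opened the connection
    dest_host: str       # destination hostname


def is_ai_host(host: str) -> bool:
    """True if host is one of AI_HOSTS or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == h or host.endswith("." + h) for h in AI_HOSTS)


def flag_suspicious(events):
    """Return WebView2 connections to AI hosts from unapproved parents."""
    return [
        e for e in events
        if e.process.lower() == WEBVIEW2_PROCESS
        and is_ai_host(e.dest_host)
        and e.parent_process.lower() not in APPROVED_PARENTS
    ]
```

A heuristic like this would only surface candidates for review. Legitimate applications embed WebView2 as well, so the allowlist would need tuning for each environment.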

Check Point’s PoC, tested on Grok and Microsoft Copilot, requires neither an account nor an API key for the AI services. That leaves no account to suspend or key to revoke, which makes the channel harder to trace and block.

The researchers note that AI platforms have safeguards against obviously malicious exchanges, but say these checks can be bypassed by encrypting the data so that it appears only as opaque, high-entropy blobs.

They argue that using AI as a C2 proxy is only one way to abuse AI services. Others include operational decision-making, such as choosing which targets to pursue and which tactics to use.

BleepingComputer has reached out to Microsoft for clarification on the vulnerability of Copilot and the preventive measures in place. Updates will be provided as soon as they are available.
